Making Machines Understand You

In this post, I will explain how bol.com is going to use Machine Learning and Natural Language Processing to interpret what users say.

Historically, we have helped our customers in multiple ways: by informing them on our website, in our app and in our email communication, and through our chatbot Billie and our call center.

To optimize all these forms of assistance, a good understanding of the user intent is essential.

Our automated chat interface Billie

One way in which we are going to understand our customers better is through improved intent recognition. This will let our automated chat system give better answers, which helps customers by giving them fast access to information and actions, and saves time for our human chat agents.

Below, I will explain how we are improving our customer intent recognition and show our current results.

Intent Recognition

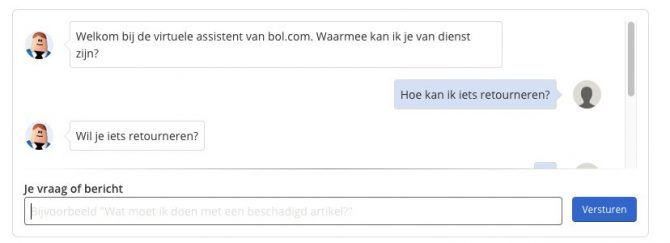

On our website, people can enter free text in our chatbot. To handle this free-text input, we have to map it to a limited set of FAQs. These FAQs are categories of questions, such as "How can I return a product?". We want to recognize these questions and map them to the corresponding FAQs.

Our goal is to recognize the user's intent across different channels: in our chatbot Billie, in e-mail conversations and even in voice interactions.

Visualization of Intent Recognition

We want to predict the most likely intents (questions) a user might have, mainly based on their (textual) input. To do so, we train a model that predicts a probability for each class (a certain topic or question).
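To make "a probability for each class" concrete, here is a minimal Python sketch that turns a model's raw per-intent scores into probabilities with a softmax and returns the most likely intents. The FAQ labels and scores are hypothetical, and the post does not say exactly how the model's output layer is implemented; a softmax is simply the standard choice for multi-class classification.

```python
import math


def softmax(logits):
    """Turn raw per-intent scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() and does not change the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_intents(logits, labels, k=3):
    """Return the k most likely (label, probability) pairs, most likely first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical FAQ labels and model scores, for illustration only.
labels = ["return_product", "track_order", "change_address", "payment_issue"]
logits = [2.1, 0.3, -1.0, 0.9]
for label, p in top_k_intents(logits, labels, k=2):
    print(f"{label}: {p:.2f}")
```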

We use the following methods from literature to train our models:

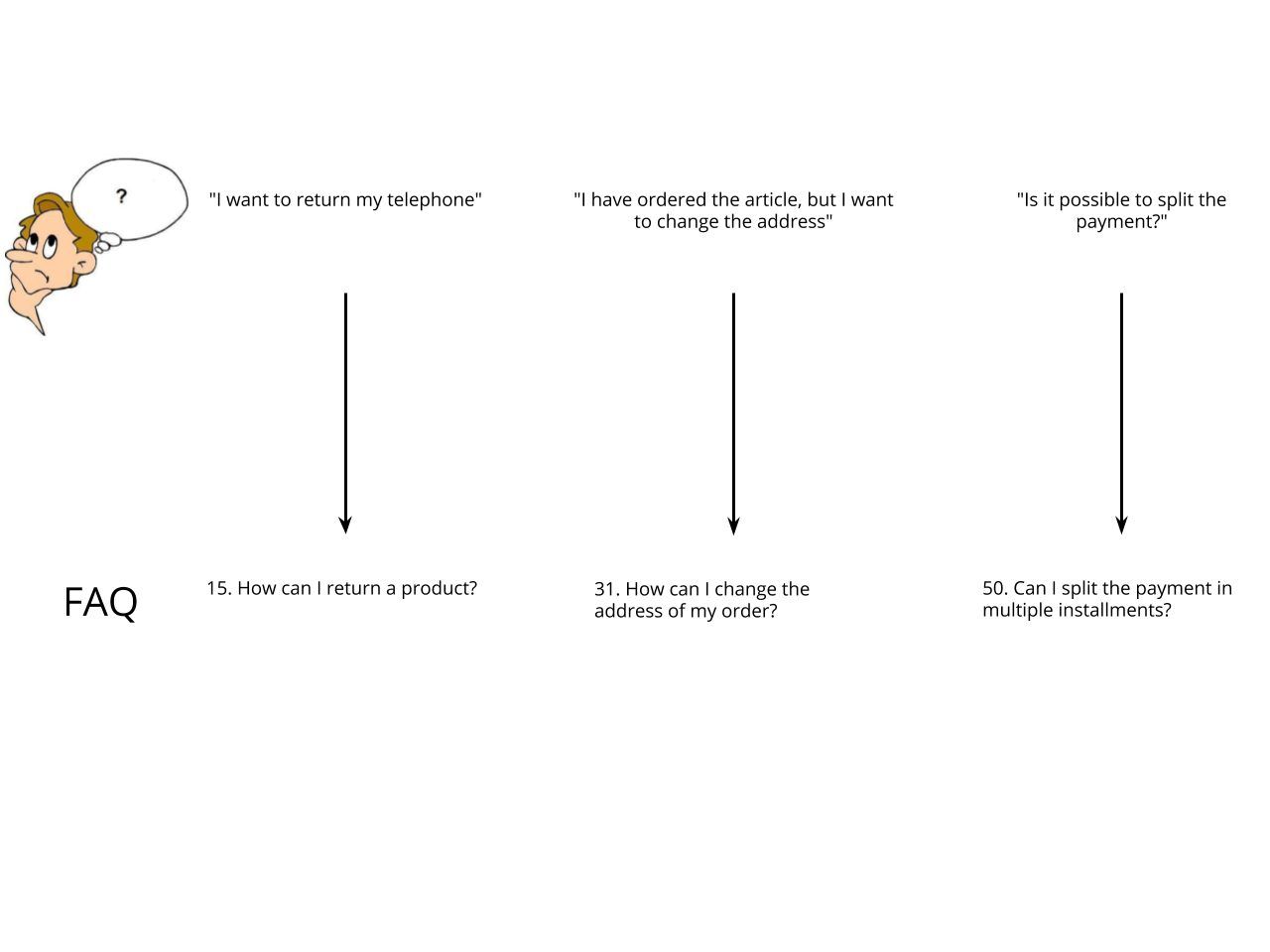

- The regularization method Dropout <1>, which improves the final accuracy on unseen data

Visualization of the Dropout technique, from Srivastava, Nitish, et al. "Dropout: a simple way to prevent neural networks from overfitting" <1>

This technique randomly removes a set of neurons (which can be understood as intermediate features) from each layer of the network during training. This acts as a regularizer: the following layers in the network rely less on specific individual features and must rely on multiple features instead. As a result, our model generalizes better: it achieves higher accuracy on our validation set.
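A minimal sketch of dropout applied to one layer's activations, in plain Python. Note that this is the common "inverted" variant, which rescales the surviving units during training; the original paper <1> instead scales the weights at test time, which is equivalent in expectation. The function name and the `p_drop` parameter are illustrative, not taken from any specific library.

```python
import random


def dropout(activations, p_drop, training=True, rng=random):
    """Inverted dropout on a list of activations from one layer.

    During training each unit is zeroed with probability p_drop, and the
    surviving units are scaled by 1 / (1 - p_drop) so that the expected value
    of every unit is unchanged. At inference time the input passes through as-is.
    """
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
layer_output = [0.5, 1.2, -0.3, 2.0, 0.8]
print(dropout(layer_output, p_drop=0.5, rng=rng))           # training: random units zeroed
print(dropout(layer_output, p_drop=0.5, training=False))    # inference: unchanged
```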

- The adaptive optimization method Adam <3>

This optimization method uses momentum and an adaptive learning rate for each parameter, and often trains faster than other methods. Another nice property is that it often requires little tuning, in contrast to more basic optimization methods such as standard Stochastic Gradient Descent (SGD) with momentum. A drawback of Adam is that it often generalizes less well than other optimization methods such as SGD with momentum. Some of the latest research highlights on optimization methods for deep learning can be found in this blog post.
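The update rule described above can be sketched for a single scalar parameter as follows. It follows the algorithm in the Adam paper <3> with that paper's default values for beta1, beta2 and eps; the learning rate of 0.1, the toy objective and the `adam_minimize` helper are assumptions chosen for illustration.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function with Adam, given a function returning its gradient."""
    x = x0
    m = 0.0  # first-moment estimate: running mean of gradients (momentum)
    v = 0.0  # second-moment estimate: running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at zero, so early estimates are too small.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Per-parameter step size: lr is divided by the root of the second moment.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3); its minimum is at x = 3.
x_star = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_star, 3))
```

Because of the bias correction, the very first step has a size of roughly `lr` regardless of the gradient's magnitude, which is one reason Adam tends to need little tuning.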

- Batch Normalization <4>, a method that improves training speed and also makes the model generalize better.

Batch Normalization normalizes each neuron of a layer to have zero mean and unit variance, based on the statistics of a mini-batch (a small set of examples used in a single training step, usually a power of two such as 64, 128 or 256 examples), and then applies a learned scale and shift. This speeds up training and also acts as a regularizer, because the batch statistics introduce noise into the optimization process. After training, estimates of the mean and variance collected during training are used to normalize test examples.
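A minimal sketch of the batch-normalization forward pass for a single neuron. `gamma` and `beta` are the learned scale and shift (fixed here rather than trained), and the running mean and variance are tracked with an exponential moving average, which is a common implementation choice and an assumption here; the class name is hypothetical.

```python
import math


class BatchNorm1Neuron:
    """Batch normalization for one neuron (feature), in plain Python."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.gamma, self.beta = 1.0, 0.0          # learned in practice, fixed here
        self.momentum, self.eps = momentum, eps
        self.running_mean, self.running_var = 0.0, 1.0

    def forward(self, batch, training=True):
        if training:
            # Use the statistics of the current mini-batch only.
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # Update the running estimates that are used later for test examples.
            self.running_mean += self.momentum * (mean - self.running_mean)
            self.running_var += self.momentum * (var - self.running_var)
        else:
            mean, var = self.running_mean, self.running_var
        return [self.gamma * (x - mean) / math.sqrt(var + self.eps) + self.beta
                for x in batch]


bn = BatchNorm1Neuron()
out = bn.forward([2.0, 4.0, 6.0, 8.0])   # one mini-batch of activations for this neuron
print([round(v, 3) for v in out])
```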

For predicting the intent from the text input alone, we experimented with a few models:

- Character-level Long Short-Term Memory networks (LSTMs) <5>, a popular recurrent neural network architecture

LSTMs are popular in natural language processing applications such as machine translation, in speech recognition, and in domains involving time-based sequences, such as forecasting. In practice, word-based LSTMs are often used instead of character-based ones, because they have a lower computational cost while performing similarly.

- Character-level Convolutional Neural Networks (CNNs) <2>, a model widely used in computer vision that is gaining popularity for language problems because of its computational advantages (faster training and inference) and high accuracy.

CNNs work by stacking small convolutional filters, resulting in a powerful model that combines local features into more global features (e.g. word-level features, combinations of word-level features, etc.).
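To show what a convolutional filter does on character input, here is a deliberately tiny sketch: each sentence is one-hot encoded per character, a single hand-set filter of width 3 slides over it, and max-over-time pooling keeps the strongest response. This is not the architecture of Zhang et al. <2> or of our model; in a real character-level CNN the filters are learned and stacked in several layers. The alphabet, filter and example sentences are hypothetical.

```python
def one_hot_chars(text, alphabet):
    """Encode a string as one-hot vectors; characters outside the alphabet become all zeros."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    vectors = []
    for ch in text.lower():
        vec = [0.0] * len(alphabet)
        if ch in index:
            vec[index[ch]] = 1.0
        vectors.append(vec)
    return vectors


def conv1d(sequence, kernel):
    """'Valid' 1-D convolution (cross-correlation, as in deep learning libraries).

    sequence: list of T feature vectors; kernel: list of k weight vectors.
    Returns one activation per window position (T - k + 1 values).
    """
    k = len(kernel)
    out = []
    for start in range(len(sequence) - k + 1):
        window = sequence[start:start + k]
        out.append(sum(w * x
                       for wvec, xvec in zip(kernel, window)
                       for w, x in zip(wvec, xvec)))
    return out


def relu(xs):
    return [max(0.0, x) for x in xs]


def max_over_time(xs):
    return max(xs)


alphabet = "abcdefghijklmnopqrstuvwxyz "
# Hypothetical hand-set width-3 filter that responds to the trigram "ret".
kernel = [[1.0 if ch == target else 0.0 for ch in alphabet] for target in "ret"]

for sentence in ["how can i return this", "where is my package", "track my order"]:
    features = relu(conv1d(one_hot_chars(sentence, alphabet), kernel))
    print(f"{sentence!r} -> {max_over_time(features)}")
```

With this filter a full "ret" scores 3, while partial matches such as "re" followed by another character score less; further convolutional layers can combine such local detectors into word-level features.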

- Relatively simple linear bag-of-words (BoW) models and word-level CNNs.

Word-based models first split sentences into words and create a dictionary that maps every word to an index. These word features are then used as input to a model, either through one-hot encoding (a vector indicating whether each word is present in the sentence or not) or through a lookup table (word embeddings).
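The two word-based input representations can be sketched in a few lines of Python: a dictionary built from training sentences, a list of indices (as fed to an embedding lookup table), and a binary bag-of-words vector. Whitespace tokenization, lowercasing and the reserved `<unk>` index are simplifying assumptions; real tokenizers also handle punctuation and more.

```python
def build_vocab(sentences):
    """Map every word seen in the training sentences to an integer index.

    Index 0 is reserved for out-of-vocabulary (unknown) words.
    """
    vocab = {"<unk>": 0}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def to_indices(sentence, vocab):
    """Lookup-table input: one index per word, e.g. for a word-embedding layer."""
    return [vocab.get(word, 0) for word in sentence.lower().split()]


def to_bag_of_words(sentence, vocab):
    """Binary bag-of-words vector; all unknown words share slot 0."""
    vec = [0.0] * len(vocab)
    for idx in to_indices(sentence, vocab):
        vec[idx] = 1.0
    return vec


train = ["how can i return my order", "where is my order"]
vocab = build_vocab(train)
print(to_indices("where is my package", vocab))
print(to_bag_of_words("where is my package", vocab))
```

Here "package" never occurred in the training sentences, so it is mapped to the `<unk>` index and whatever it meant is lost to the model.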

Results

After training on 290,094 examples (90% of the data) and validating on the remaining 10% (32,234 examples), we selected the best-performing model: a character-level CNN. Given only the first sentence, this model correctly predicts the customer's intent in 76.5% of the conversations in the test set. The character-level CNN works well because the sentences are both very short and contain many typos. In a word-based model, a word that is not in the dictionary built from the training data becomes useless. A character-based model avoids this problem: any character can be used as a feature in our model, and the (stacked) convolutions make sure that important character combinations are taken into account. Our experience supports this: when testing our model in a service, we found that it is quite insensitive to typos.
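The typo argument can be illustrated with a crude proxy: a word-level dictionary lookup fails on a misspelled word, while the misspelling still shares several character trigrams with the intended word. A character-level CNN does not compute trigram overlap explicitly, but its narrow convolutional filters respond to similar local character patterns. The vocabulary and typos are hypothetical, and Jaccard similarity is used here only to make the overlap visible.

```python
def char_ngrams(word, n=3):
    """Set of character n-grams, with '#' boundary markers around the word."""
    padded = f"#{word}#"
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}


def jaccard(a, b):
    """Size of the intersection divided by the size of the union of two sets."""
    return len(a & b) / len(a | b) if a | b else 0.0


vocabulary = {"return", "order", "package", "refund"}
for typo in ["retrun", "pakage", "refnud"]:
    in_vocab = typo in vocabulary  # False: a word-level model only sees "unknown"
    best = max(vocabulary, key=lambda w: jaccard(char_ngrams(typo), char_ngrams(w)))
    score = jaccard(char_ngrams(typo), char_ngrams(best))
    print(f"{typo}: in vocabulary={in_vocab}, closest by trigrams={best} ({score:.2f})")
```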

We also evaluated our model against the intent recognition of our current chatbot on a small set of human-annotated conversations. Although our model only receives the first sentence as input, it performs on par with this baseline (about 66% correctly recognized), which uses one or more sentences for labeling. This is a promising result, and we are going to run an A/B test to measure the results on chat conversations. We will measure two metrics: the number of people who leave the chat to interact with a human agent, and the length of the conversation. Based on these results, we will decide how to use our system and how to improve it.
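The two A/B-test metrics could be computed along the lines of the sketch below. The post does not say which statistical test will be used; the two-proportion z-test is one common choice for comparing hand-off rates between variants, and the conversation data, tuple format and function names are hypothetical.

```python
import math
from statistics import mean


def summarize(conversations):
    """Return (hand-off count, conversation count, mean turns) for one variant.

    Each conversation is a hypothetical (handed_off_to_agent, number_of_turns) tuple.
    """
    handoffs = sum(1 for handed_off, _ in conversations if handed_off)
    return handoffs, len(conversations), mean(turns for _, turns in conversations)


def two_proportion_z_test(k1, n1, k2, n2):
    """Two-sided z-test for a difference between two proportions (normal approximation)."""
    p1, p2 = k1 / n1, k2 / n2
    pooled = (k1 + k2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Standard normal CDF via math.erf, turned into a two-sided p-value.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


control = [(True, 7), (False, 3), (True, 9), (False, 4)]
treatment = [(False, 3), (False, 2), (True, 8), (False, 4)]
k_c, n_c, turns_c = summarize(control)
k_t, n_t, turns_t = summarize(treatment)
print(f"hand-off rate: control {k_c / n_c:.2f}, treatment {k_t / n_t:.2f}")
print(f"mean turns:    control {turns_c:.2f}, treatment {turns_t:.2f}")
# Real traffic gives far larger counts, e.g. 400/1000 vs 340/1000 hand-offs:
print(two_proportion_z_test(400, 1000, 340, 1000))
```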

Conclusion & Next Steps

We will continue improving our current models by learning from new data, by improving our model architectures and training algorithms, and by evaluating our models and systems when using them in practice.

Furthermore, we will apply machine learning, text analysis and AI systems to make our service even better and more personal:

- Combining textual information from the user with contextual information. This will lead to more accurate intent recognition. Furthermore, we can provide users with relevant information even if they don't tell us what information they want (for example, as hints on our website and in our app).

- Automatically finding the best match between customers and call center agents based on historical feedback, information about the agent and the customer, and contextual information.

- Identifying the most urgent problems for customers and making them available to sellers and business people. Examples include detecting failing products, packages that do not arrive, and much more. Techniques we plan to use include sentiment analysis, named entity recognition and unsupervised clustering.

- Improving our chatbot by handling a wider set of questions and providing a more proactive experience.

- Forecasting how many agents we need on a certain day and hour, given historical data and other forecasts (e.g. the total number of products sold). This could also enable more fine-grained forecasts. For example, if we know the Kobo e-book reader is going to sell well tomorrow, we need more agents with knowledge of this e-book reader.

References

<1> Nitish Srivastava, Geoffrey E. Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1):1929–1958, 2014.

<2> Xiang Zhang, Junbo Jake Zhao, and Yann LeCun. Character-level convolutional networks for text classification. In Corinna Cortes, Neil D. Lawrence, Daniel D. Lee, Masashi Sugiyama, and Roman Garnett, editors, Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, December 7-12, 2015, Montreal, Quebec, Canada, pages 649–657, 2015.

<3> Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. CoRR, abs/1412.6980, 2014.

<4> Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Francis R. Bach and David M. Blei, editors, Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6-11 July 2015, volume 37 of JMLR Workshop and Conference Proceedings, pages 448–456. JMLR.org, 2015.

<5> Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural Computation, 9(8):1735–1780, 1997.