Getting organized: cleaning up bol.com with Data Science

Data Science and Machine Learning are becoming ever more integrated into businesses. Especially in e-commerce, there is huge potential for predictive modeling. It is therefore no surprise that bol.com is putting extra focus on significantly expanding its Data Science efforts in the coming year. That’s not to say that there aren’t already some interesting Data Science projects running. In this blog post we will take a look at one of the projects I am currently working on with fellow data scientist Joep Janssen: the chunk project.

Let’s get chunky

Now I hear you thinking: what is a chunk? The answer is quite simple: chunks are nothing more than a custom bol.com product categorization. Each product in our catalogue is assigned to a chunk. Up until now, suppliers and plaza partners have always assigned the chunk manually. And in any manual process, errors can and will be made. This means that you will sometimes find a product in a part of the webshop where it does not belong. We want to prevent this, as it is bad for the customer experience. This is where Data Science comes in. Can we build a model that automatically assigns a chunk based on product data?

The chunk landscape

To make this project a success there are a few problems that need to be tackled. As in all Data Science projects, good data quality is essential to get meaningful results. And although our data quality is good in general, there will always be products for which it is poor.

Additionally, we have our own chunk landscape to wrestle with. We currently have roughly 6000 chunks and we want to predict all of them properly. That is a challenge in its own right. However, the problem is compounded by the fact that many chunks are ambiguous. So ambiguous that even a human has a hard time seeing the difference. We have, for instance, 15 different types of spoons. To make matters even worse, there are also a lot of chunks that contain 10 products or fewer. Building a model that performs well on those chunks and still generalizes is nearly impossible.

Because the chunk landscape is this messy, a large part of our project team is dedicated to cleaning it up. By merging chunks that are similar and removing chunks that are hardly used, we will obtain a chunk landscape that is much more manageable and less convoluted. A cleaner collection of chunks will also make our lives much easier, as it will undoubtedly boost the performance of our model.
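To give a feel for the 'hardly used' part of that clean-up, here is a minimal sketch of how sparsely filled chunks could be listed for review. It is an illustration, not our actual tooling: the function name `find_small_chunks`, the toy catalogue and the `max_size` cutoff are all made up for this example, and deciding which chunks really get merged or removed remains a human call.

```python
from collections import Counter


def find_small_chunks(product_chunks, max_size=10):
    """Return (chunk, product_count) pairs for chunks holding at most
    `max_size` products, smallest first.

    `product_chunks` maps a product id to its chunk name. The default
    cutoff of 10 is only an illustrative assumption.
    """
    counts = Counter(product_chunks.values())
    small = [(chunk, n) for chunk, n in counts.items() if n <= max_size]
    # Sort by size, then by name, so the output order is deterministic.
    return sorted(small, key=lambda item: (item[1], item[0]))


# Hypothetical toy catalogue: product id -> chunk name.
catalogue = {
    "p1": "soup spoons",
    "p2": "soup spoons",
    "p3": "dessert spoons",
    "p4": "bluetooth speakers",
    "p5": "bluetooth speakers",
    "p6": "bluetooth speakers",
}

small_chunks = find_small_chunks(catalogue, max_size=2)
for chunk, size in small_chunks:
    print(f"{chunk}: {size} product(s)")
```

A list like this is only a starting point: a tiny chunk may be a perfectly valid niche category, so each candidate still needs a look before it is merged into a neighbour.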

The truth and nothing but the truth

A more fundamental problem that we cannot ‘easily’ solve is the fact that our labels, the ground truth on which we train our models, are intrinsically flawed. We know there are products that are not in the right chunk, but we don’t know which ones, let alone how many. Is it 1%, or is it 5%? All we know is that a small, unknown fraction of our catalogue is polluted. This is not only noise for our model, but it also makes validation of our model a lot more difficult.

Validation of a model is crucial, and in most cases it is pretty straightforward. You have a validation or test set, you run the model on it, and you check how close the predictions are to the truth. If you get 100% recall and precision you’ll be the happiest data scientist in the world. Right? But what if you are less interested in reproducing the labels and more interested in finding the products that need to be switched to another label altogether? How do we validate these predictions? There is no ground truth in this case, so the only thing remaining is validating by hand.
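To make that a bit more concrete, here is a minimal sketch of how such candidates could be selected: keep the products where the model disagrees with the current label and is fairly sure about it. The field names, the `relabel_candidates` helper and the 0.9 confidence threshold are assumptions made for this example, not a description of our production pipeline.

```python
def relabel_candidates(products, min_confidence=0.9):
    """Return products whose predicted chunk differs from the current one,
    most confident predictions first.

    Each product is a dict with the (hypothetical) keys 'id',
    'current_chunk', 'predicted_chunk' and 'confidence'.
    """
    candidates = [
        p for p in products
        if p["predicted_chunk"] != p["current_chunk"]
        and p["confidence"] >= min_confidence
    ]
    return sorted(candidates, key=lambda p: p["confidence"], reverse=True)


# Made-up model output for three products.
predictions = [
    {"id": "p1", "current_chunk": "bread knives",
     "predicted_chunk": "bluetooth speakers", "confidence": 0.97},
    {"id": "p2", "current_chunk": "soup spoons",
     "predicted_chunk": "soup spoons", "confidence": 0.99},
    {"id": "p3", "current_chunk": "soup spoons",
     "predicted_chunk": "dessert spoons", "confidence": 0.55},
]

to_review = relabel_candidates(predictions)
print([p["id"] for p in to_review])
```

Note that a disagreement is only a suspicion: the model may be wrong, the label may be wrong, or the two chunks may simply be ambiguous. That is exactly why these candidates still need a human check.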

Bol.com has a catalogue with millions of products, so even a 1% change means that tens of thousands of products will change chunks. Nobody is eager to go through thousands of model predictions to check whether they are actually correct. It’s tedious and mind-numbing work at the best of times. So, what do you do then? You share the pain with your colleagues.

The Polish Night

The holiday season is incredibly important for just about everyone in retail, including bol.com. It’s the time of the year when people order large amounts of products for their loved ones, and therefore it’s important that stores manage to draw as many customers as possible. To ensure that bol.com is ready for the holiday season, we have a Polish Night at the start of November: a single evening where hundreds of bol.com employees work together to make the store look as good as possible. All while enjoying a beer and some live music.

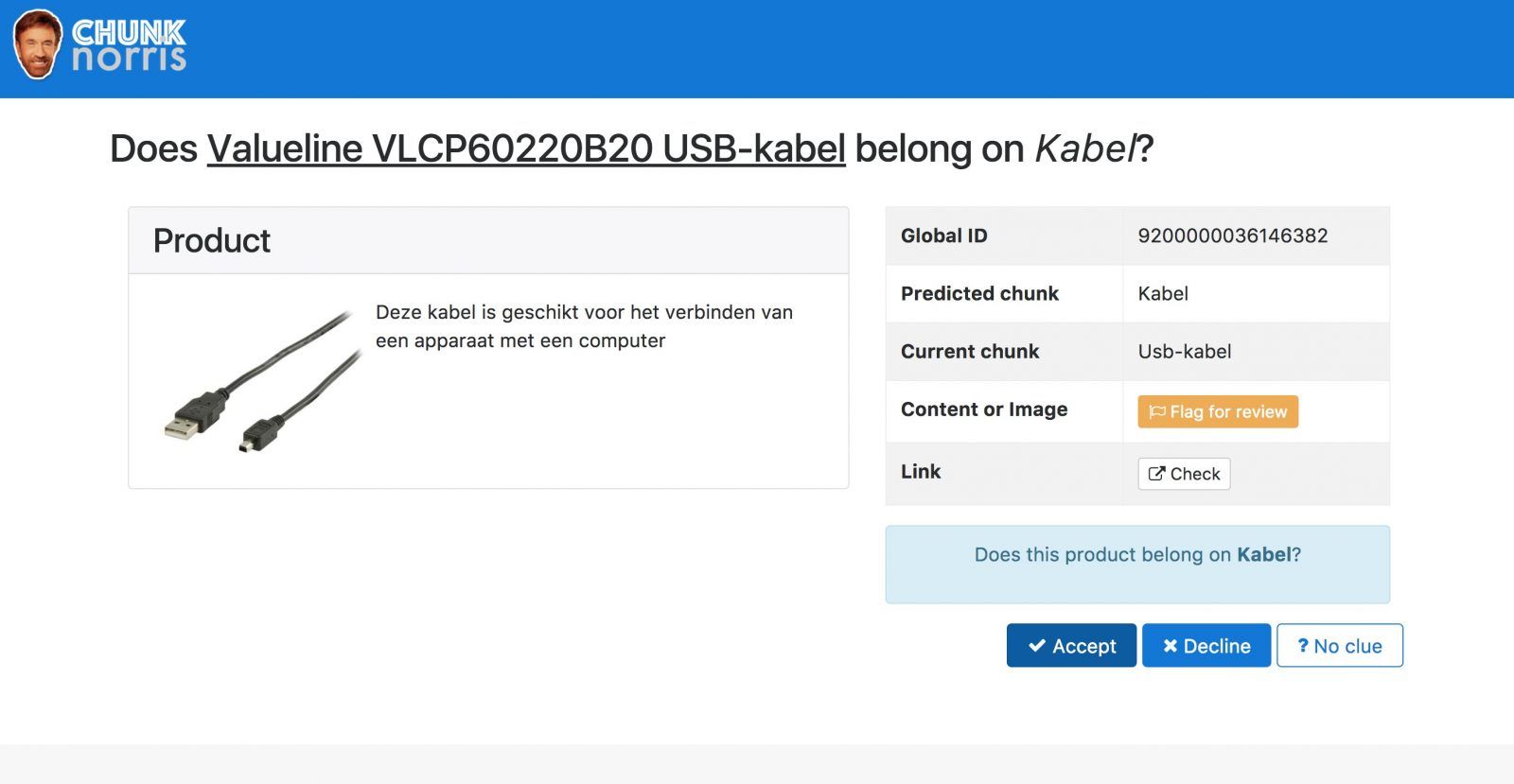

What better moment to test our model than this evening? So we joined forces with one of our POs and fellow machine learning enthusiast Pim Nauts and created a simple user interface called Chunk Norris. Fed by the predictions of our model, Chunk Norris would present users with products that we think are currently in the wrong chunk. The user could then simply answer yes, no or no clue. Roughly 25 people spent 3 hours validating as many products as possible, leading to more than 26,000 validations, of which well over 12,000 confirmed that the prediction was correct. This not only gave us essential information about our model’s performance, but it’s also a great example of adding value early in the life cycle of a data science project.
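For the curious, turning such answers into a performance figure could look roughly like the sketch below. It is an illustration under assumptions: the `summarize_validations` helper and the sample answers are made up, and leaving the "no clue" answers out of the denominator is just one possible choice.

```python
from collections import Counter


def summarize_validations(answers):
    """Tally 'yes' / 'no' / 'no clue' answers and compute the share of
    confirmed predictions among the answers that gave a verdict.

    Excluding 'no clue' from the denominator is an assumption made for
    this sketch; counting it as unconfirmed is equally defensible.
    """
    counts = Counter(answers)
    judged = counts["yes"] + counts["no"]
    confirmed_rate = counts["yes"] / judged if judged else None
    return {
        "yes": counts["yes"],
        "no": counts["no"],
        "no clue": counts["no clue"],
        "confirmed_rate": confirmed_rate,
    }


# Made-up sample of answers, not real Polish Night data.
sample_answers = ["yes", "no", "yes", "no clue", "yes"]
summary = summarize_validations(sample_answers)
print(summary)
```

The confirmed rate is effectively the precision of the 'wrong chunk' predictions on the validated sample. It says nothing about recall, because misplaced products that the model never flagged were not shown to anyone.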

What’s next

The Polish Night was a big success for the chunk project, but it is definitely not the end of the road. We are still working hard on cleaning up our collection of chunks, which in turn will improve our model. And of course we want to put the Chunk Norris interface to good use, so we are developing a service that regularly trains a model and generates predictions for all products in the catalogue. Our product specialists can then use Chunk Norris to look at these predictions, clean up the catalogue efficiently and improve the model even further. And before you know it, you will never find a Bluetooth speaker in between the bread knives again!