Coroutine Gotchas – Dispatchers

Exploring the realm of coroutines and mastering the associated concepts can be both a rewarding and challenging experience. We've been there and want to help other developers who encounter the same challenges as we did. This is the second instalment of the Coroutine Gotchas series, which contains articles about the coroutine pitfalls we've experienced. In this blog, we aim to clear up confusion about Dispatchers. This blog post is standalone and does not require you to read previous articles.

Understanding Dispatchers can be tricky if you are new to coroutines and especially to asynchronous programming. No worries! We've investigated it for you, so you don't have to!

Why do you need a dispatcher?

To operate effectively, every coroutine relies on specific components that collectively form the Coroutine Context. A coroutine context has elements like a Job that handles the lifecycle, a coroutine name for debugging, an exception handler, and a dispatcher. We'll explore the details shortly, but in a nutshell, the dispatcher is the element that determines which thread or thread pool the coroutine runs on and that schedules its execution there.
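As a minimal sketch of how these elements fit together, the snippet below combines a dispatcher, a name, and an exception handler into one context and then looks each element up by its key. The name "fetcher" and the handler body are hypothetical and only serve the illustration; the handler is inspected here, not triggered.

```kotlin
import kotlinx.coroutines.CoroutineExceptionHandler
import kotlinx.coroutines.CoroutineName
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.Job
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlin.coroutines.ContinuationInterceptor

fun main() = runBlocking {
    // Hypothetical handler and name, used only for illustration.
    val handler = CoroutineExceptionHandler { _, error -> println("Unhandled: $error") }
    // Context elements are combined with the + operator.
    val context = Dispatchers.Default + CoroutineName("fetcher") + handler

    val job = launch(context) {
        // Every element can be read back through its key.
        println("name       = ${coroutineContext[CoroutineName]?.name}")
        println("job        = ${coroutineContext[Job]}")
        println("handler    = ${coroutineContext[CoroutineExceptionHandler]}")
        // The dispatcher is stored under the ContinuationInterceptor key.
        println("dispatcher = ${coroutineContext[ContinuationInterceptor]}")
        println("thread     = ${Thread.currentThread().name}")
    }
    job.join()
}
```

The Job is the one element we did not pass in: `launch` creates a new Job for every coroutine it starts.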

Now, let's dive into a developer's story to see how using the correct dispatchers can help us improve our daily work. Meet Tracey Codeberg, a highly enthusiastic developer who takes over a Kotlin application that responds quite slowly. As she starts investigating the cause, she discovers a piece of code making 100 calls to a fake API for some reason:

    fun fetchData(): Long = measureTimeMillis {
        val restTemplate = RestTemplate()
        val url = "https://jsonplaceholder.typicode.com/posts/1"
        // 100 sequential calls; each one blocks the calling thread until it returns
        List(100) {
            restTemplate.getForObject(url, String::class.java)
        }
    }

This takes approximately 2.7 seconds to finish, quite a latency for collecting some data. In this example, every RestTemplate call blocks the thread it is running on until the call returns, and only then does the next RestTemplate call get the thread. It works like this because RestTemplate is, by nature, a synchronous, blocking client. If you want to know how blocking and non-blocking operations work, there is a detailed explanation here.

After learning this, Tracey decides that she can make use of some of the idle threads on her system to make multiple blocking calls at the same time. It is good to clear out a misconception here: this is called parallelism, not concurrency.

GOTCHA: Parallelism and concurrency are not the same thing

Even if this is not a coroutine specific topic, there is a common misconception regarding parallelism and concurrency. These terms are often used interchangeably, but they represent distinct concepts.

Let's take a brief journey into the realms of sequential blocking, concurrency, and parallelism by imagining a bustling office scenario, where a diligent clerk is actively assisting clients.

Sequential/Blocking (No Concurrency or Parallelism):

In our imaginary office, this behavior would be seen as the clerk helping one client at a time. While the first client fills in the form, the clerk waits and doesn't serve any other client.

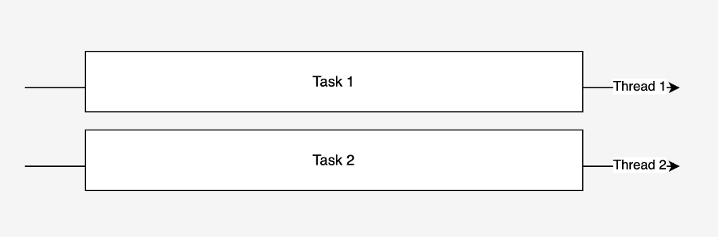

A single-threaded program works in a sequential/blocking manner, where tasks are executed one after another without any concurrent or parallel execution.

Parallelism:

This involves the clerk calling in a colleague to help. Now, both clients are being served simultaneously by different clerks. Each clerk works independently on their assigned task, leading to true parallel execution.

In a multi-process or multi-threaded program, tasks are genuinely executed simultaneously on multiple processors or cores. For example, two RestTemplate calls can be made at the same time, but they will use and block different threads, not sharing their resources.

Concurrency:

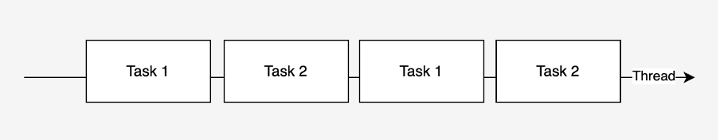

This time, the clerk opts for a more efficient approach by utilizing the time when the first client is filling out the form to help another client. The clerk can seamlessly switch back and forth between the two clients, managing different tasks concurrently. This doesn't necessarily imply that both tasks are progressing simultaneously, but there's an overlap in their execution.

In an asynchronous program, tasks are executed independently, and the program orchestrates the switching between tasks to create the illusion of simultaneous progress. With this approach, you eliminate idle threads, maximizing resource utilization. An alternative non-blocking and asynchronous HTTP client, known as WebClient, exemplifies this concept. If you make two WebClient calls using one thread, they will share the thread, because neither call blocks it while waiting for an answer.

Using non-blocking libraries helps with resource utilization because no thread is wasted; they can be used concurrently. Check this article for more details.
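The clerk analogy can be sketched on a single thread. In the snippet below, the hypothetical helper `timeTwoTasks` starts two coroutines inside `runBlocking`, so both run on the calling thread. `Thread.sleep` stands in for a blocking call and `delay` for a non-blocking one; the 500 ms wait is an arbitrary value chosen for the illustration.

```kotlin
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlin.system.measureTimeMillis

// Runs two copies of `task` as coroutines and returns the elapsed time in ms.
suspend fun timeTwoTasks(task: suspend () -> Unit): Long = measureTimeMillis {
    coroutineScope {
        repeat(2) { launch { task() } }
    }
}

fun main() = runBlocking {
    // Blocking: the single thread is held during each wait, so the waits add up.
    val blocking = timeTwoTasks { Thread.sleep(500) }
    // Suspending: the thread is released during each wait, so the waits overlap.
    val suspending = timeTwoTasks { delay(500) }
    println("blocking: $blocking ms, suspending: $suspending ms")
}
```

On one thread, the blocking variant should take roughly twice as long as the suspending one. No second thread is involved in either case, which is what separates concurrency from parallelism.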

Okay, first gotcha out of the way! Now, how does Tracey use this information? For her initial attempt, she recognizes the need to make parallel calls due to RestTemplate being blocking. She searches for a way to achieve this in Kotlin and decides to give coroutines a try. With the program below, she hopes to make 100 calls in parallel:

    measureTimeMillis {
        runBlocking {
            val jobs = List(100) {
                async { restTemplate.getForObject(url, String::class.java) }
            }
            jobs.awaitAll()
        }
    }

Well, it's unfortunate that she is using runBlocking since we wrote a whole article about how it's a bad idea, but let's cut her some slack since she is still learning.

She runs the code and realizes that the amount of time is not changing much; it is still around 2.7 seconds. This is weird because, with these calls made in parallel, the application should wait significantly less. She starts debugging to see how her threads are working:

    val timeInMillis = measureTimeMillis {
        runBlocking {
            val jobs = List(100) {
                async {
                    // Print from inside the coroutine to see which thread runs it
                    println("Thread name: ${Thread.currentThread().name}")
                    restTemplate.getForObject(url, String::class.java)
                }
            }
            jobs.awaitAll()
        }
    }
    println("Time in millis $timeInMillis")

Output:

...

Thread name: main

Thread name: main

Thread name: main

Time in millis 2749

Wow, it seems like no parallel calls are being made. It is all still serial, and in fact it all runs on the main thread. Surprised, she dives back into the documentation and discovers (drum roll) ...

GOTCHA! runBlocking does not provide parallelism out of the box:

By default, runBlocking uses an EmptyCoroutineContext and runs its coroutines on the calling thread, which it blocks until they complete; in this case, that is the main thread. You need to specify a dispatcher to use a thread pool so that your operations can run on different threads. This also means that no parallelism is provided out of the box if no dispatcher is provided.

Wait a minute, we also learned about non-blocking WebClient calls. How would they perform? As the first gotcha explains, WebClient works concurrently. Therefore, every call made will yield the underlying thread when waiting for its call to be done. This way, all the calls will be done without fully waiting for each other to finish. This means that you can make use of your system more effectively and get faster results!

Now, back to Tracey...

After realizing that runBlocking does not provide parallelism out of the box, Tracey decides to use the first dispatcher she sees: Dispatchers.Default. Frankly, if it wasn't good to use everywhere, why would it be called default?

    val timeInMillis = measureTimeMillis {
        runBlocking(Dispatchers.Default) {
            val jobs = List(100) {
                async {
                    // Print from inside the coroutine to see which thread runs it
                    println("Thread name: ${Thread.currentThread().name}")
                    restTemplate.getForObject(url, String::class.java)
                }
            }
            jobs.awaitAll()
        }
    }
    println("Time in millis $timeInMillis")

Output:

Thread name: DefaultDispatcher-worker-1

Thread name: DefaultDispatcher-worker-6

Thread name: DefaultDispatcher-worker-5

Thread name: DefaultDispatcher-worker-7

Time in millis 1114

Okay, that made some difference. Why? Instead of executing the operation on the main thread, the default dispatcher delegates it to a thread pool whose size corresponds to the number of CPUs, ensuring a minimum of two threads.
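To see that pool size in action, here is a small sketch that records which threads pick up the work. The helper name `defaultWorkerNames` and the 20 ms sleep (a stand-in for a blocking call) are assumptions made for this illustration.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import java.util.concurrent.ConcurrentHashMap

// Launches `tasks` short blocking tasks on Dispatchers.Default and
// returns the names of the threads that ran them.
suspend fun defaultWorkerNames(tasks: Int): Set<String> {
    val names = ConcurrentHashMap.newKeySet<String>() // thread-safe set
    withContext(Dispatchers.Default) {
        repeat(tasks) {
            launch {
                Thread.sleep(20) // stand-in for a blocking call
                names.add(Thread.currentThread().name)
            }
        }
    } // withContext returns only after all launched children have finished
    return names
}

fun main() = runBlocking {
    val cores = Runtime.getRuntime().availableProcessors()
    val names = defaultWorkerNames(100)
    println("cores: $cores, distinct worker threads: ${names.size}")
}
```

With 100 tasks, the number of distinct worker threads should stay close to the number of cores rather than grow towards 100. That is why blocking calls on Dispatchers.Default queue up behind each other once every worker is busy.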

Happy with the improvement, she keeps reading to find out how she can implement things better. She is making 100 parallel calls using the blocking RestTemplate. Determined to make her system perform better, she decides to try out WebClient. With WebClient, she can make concurrent calls that utilize threads more effectively, positively influencing system performance. She can also turn her endpoint into a suspend function and get rid of the runBlocking that is calling the coroutines.

    private val webClient = WebClient.create("https://jsonplaceholder.typicode.com")

    suspend fun fetchData(): Long = measureTimeMillis {
        withContext(Dispatchers.Default) {
            List(100) {
                async {
                    webClient.get()
                        .uri("/posts/1")
                        .retrieve()
                        .bodyToMono(String::class.java)
                        .awaitSingleOrNull() ?: "Error: Unable to fetch data."
                }
            }.awaitAll()
        }
    }

Calling this suspendable, non-blocking function takes about 50 milliseconds, even with the default dispatcher’s small thread pool! Well done, Tracey!

Satisfied with the results, Tracey begins to investigate how she can transform her entire codebase to be non-blocking, using WebClient instead of RestTemplate, R2DBC instead of JDBC... Basically, moving everything to reactive libraries and frameworks. She realizes that it is quite a project, and reactive code can be challenging and hard to debug. She decides to put this idea on hold and find a way to keep her simple blocking codebase while benefiting from coroutines.

She goes back to the basics of dispatchers, and voila! There are other types of dispatchers. She reads that Dispatchers.Default is meant for non-blocking operations, but what about blocking operations? Do you hear the drumroll coming?

GOTCHA! Dispatchers.IO is for blocking operations while Dispatchers.Default is for non-blocking operations

Dispatchers.IO is used for blocking IO operations such as disk reads/writes, database reads/writes, or synchronous network calls. These operations generally take longer to finish and block the thread that they are running on. Because of this, the thread pool of Dispatchers.IO is larger than the Default dispatcher's thread pool. By default, Dispatchers.IO is limited to 64 threads, or to the number of cores if the system has more than 64 of them. (We wish!)

Meanwhile, the default dispatcher is mainly used for tasks that are CPU-bound and non-blocking. If you want to learn the difference between CPU-bound and IO-bound tasks, you can check it here. In simple terms, if you have tasks that are CPU-bound and non-blocking, you can use the Default dispatcher to execute them concurrently. It is also the dispatcher that builders such as launch and async fall back to when their context does not contain a dispatcher. If your tasks are blocking and IO-bound, then you will need Dispatchers.IO, with its larger thread pool, to be safe.
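As a hedged sketch of that division of labour, the hypothetical helper `checksumOf` below reads a file on Dispatchers.IO (blocking IO) and hashes its bytes on Dispatchers.Default (CPU-bound). The file name and the choice of `contentHashCode` as the "computation" are assumptions made purely for illustration.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import java.nio.file.Files
import java.nio.file.Path

// Hypothetical helper: blocking read on IO, CPU-bound hashing on Default.
suspend fun checksumOf(path: Path): Int {
    // Blocking file read: belongs on the larger IO pool.
    val bytes = withContext(Dispatchers.IO) { Files.readAllBytes(path) }
    // Pure computation: belongs on the core-sized Default pool.
    return withContext(Dispatchers.Default) { bytes.contentHashCode() }
}

fun main() = runBlocking {
    val file = Files.createTempFile("dispatchers-demo", ".txt")
    Files.write(file, "hello dispatchers".toByteArray())
    println("checksum = ${checksumOf(file)}")
    Files.delete(file)
}
```

Tracey's case is simpler than this: all of her work is blocking network IO, so she moves the whole batch of calls to Dispatchers.IO: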

    suspend fun fetchData(): Long = measureTimeMillis {
        withContext(Dispatchers.IO) {
            val jobs = List(100) {
                async {
                    restTemplate.getForObject(url, String::class.java)
                }
            }
            jobs.awaitAll()
        }
    }

This code takes around 293 milliseconds to run, even though she uses RestTemplate to make the calls. It is quite good! Being an ambitious developer, Tracey decides to shoot for the stars and investigate if she can make things even better. How about optimizing the threads themselves? Blocking threads is expensive, and there is only a limited number of them.

You have heard about the new and shiny Project Loom, right? Well, Tracey has heard about it too! She comes across Project Loom all the time and learns how revolutionary virtual threads are. With them, she doesn't need to waste her real threads on waiting for blocking HTTP calls. Can she use virtual threads to optimize the performance of blocking calls?

GOTCHA! Keep your blocking operations as they are and leverage virtual threads from Project Loom

Instead of using and blocking real threads, you can use virtual threads from Project Loom. This way, you do not need to resort to complex reactive solutions with coroutines. Simply create your own dispatcher that uses virtual threads and enjoy!

Tracey creates her own Loom dispatcher as follows:

    val LoomDispatcher = Executors
        .newVirtualThreadPerTaskExecutor()
        .asCoroutineDispatcher()

    val Dispatchers.Loom: CoroutineDispatcher
        get() = LoomDispatcher
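A quick way to check that such a dispatcher really runs coroutines on virtual threads is sketched below. It assumes JDK 21 or newer, where `Executors.newVirtualThreadPerTaskExecutor()` and `Thread.isVirtual` are available without preview flags. The dispatcher here is short-lived, so it is closed with `use` once the work is done.

```kotlin
import kotlinx.coroutines.asCoroutineDispatcher
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import java.util.concurrent.Executors

// Assumes JDK 21 or newer, where virtual threads are a final feature.
fun main() = runBlocking {
    // A dispatcher created from an executor is Closeable; `use` shuts it down.
    Executors.newVirtualThreadPerTaskExecutor().asCoroutineDispatcher().use { loom ->
        val onVirtualThread = withContext(loom) {
            // Runs on a thread created by the virtual-thread-per-task executor.
            Thread.currentThread().isVirtual
        }
        println("running on a virtual thread: $onVirtualThread")
    }
}
```

Tracey's `Dispatchers.Loom` property skips the `use` block because it is meant to live as long as the application does.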

Then she uses this dispatcher to make 100 blocking calls, the same way she used the other dispatchers:

    suspend fun fetchData(): Long = measureTimeMillis {
        withContext(Dispatchers.Loom) {
            val jobs = List(100) {
                async {
                    restTemplate.getForObject(url, String::class.java)
                }
            }
            jobs.awaitAll()
        }
    }

Output: 82 ms

Well, that is some difference but not that dramatic. Mind you, this example makes a bunch of parallel calls to a test API that limits how many calls can come in at the same time, which slows the calls down by nature. To see the dramatic difference between virtual threads and IO threads, Tracey rewrites this example with another blocking operation, Thread.sleep. The example launches 1000 coroutines, each of which blocks its underlying thread for 1000 milliseconds.

    runBlocking {
        val time = measureTimeMillis {
            coroutineScope {
                repeat(1000) {
                    launch(Dispatchers.IO) {
                        Thread.sleep(1000)
                    }
                }
            }
        }
        println("Dispatcher.IO blocking real threads: $time ms")
    }

Output:

Dispatcher.IO blocking real threads: 16235 ms

That matches the pool size: with 64 threads available, 1000 one-second sleeps need roughly 1000 / 64 ≈ 16 rounds. Now it is time for her to run the same example using the Loom dispatcher she created before. Each coroutine gets its own virtual thread, and sleeping for 1000 milliseconds parks only that virtual thread instead of tying up a physical thread:

    runBlocking {
        val time = measureTimeMillis {
            coroutineScope {
                repeat(1000) {
                    launch(Dispatchers.Loom) {
                        Thread.sleep(1000)
                    }
                }
            }
        }
        println("Using a Dispatcher that uses Loom virtual threads: $time ms")
    }

Output:

Using a Dispatcher that uses Loom virtual threads: 1095 ms

From 16 seconds to 1 second! That is an amazing difference! With Loom, developers can run their blocking operations using virtual threads, and this might even be the end of the reactive programming era! What do you think? Virtual threads bring up all new possibilities for developers, and we are here for it! You can read further on the performance analysis of this comparison here.

With that, we come to the end of Tracey’s story. She started with her blocking HTTP calls taking too much time and found out that she can use reactive libraries and frameworks to make her operations non-blocking. For these operations, Dispatchers.Default performs great since they do not hold up the underlying threads. However, transforming all her code base to reactive counterparts was quite complex, so she stuck with her blocking code and started using Dispatchers.IO since it has a larger thread pool. And then she discovered the magic of Project Loom, created her own thread pool with virtual threads, and used it to make her blocking calls.

But wait, there is one more dispatcher that we should know:

Dispatchers.Unconfined

Now that we are familiar with using dispatchers, let's explore the Unconfined dispatcher and observe its effects.

    runBlocking {
        launch(Dispatchers.Unconfined) {
            println("starting thread: ${Thread.currentThread().name}")
            delay(100)
            println("ending thread: ${Thread.currentThread().name}")
        }
    }

Output:

starting thread: main

ending thread: kotlinx.coroutines.DefaultExecutor

This behaviour is quite peculiar! As you can see, the coroutine starts in the main thread and then gets dispatched to another thread after calling the delay function.

GOTCHA! Be careful with Dispatchers.Unconfined

Dispatchers.Unconfined differs from the dispatchers above in that it never switches threads on its own. The coroutine starts on the thread that launched it and, after each suspension, continues on whichever thread resumes it. From a performance standpoint, this dispatcher is the most economical since it eliminates the need for thread switching. Consequently, it might seem a viable choice when thread specificity is of no concern. However, caution is warranted! It has really limited use cases (mostly seen in testing so far) and can be dangerous to use in production code.

As a rule of thumb, the Unconfined dispatcher should not be used with coroutines that are CPU-bound or that update shared data. A coroutine that uses the Unconfined dispatcher can potentially run on any thread during its lifecycle. This lack of thread confinement can lead to an unpredictable ordering of operations and potential race conditions, especially in scenarios involving shared mutable data. The IO and Default dispatchers provide a dedicated thread pool and better control over the execution context, reducing the likelihood of race conditions and improving predictability.
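The "continues on the resuming thread" behaviour is not specific to delay. As a minimal sketch, the hypothetical helper `unconfinedThreadNames` below records the thread name before and after a `withContext(Dispatchers.Default)` call inside an Unconfined coroutine; the 50 ms sleep is an arbitrary stand-in for real work.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext

// Returns the thread names seen before and after a suspension point
// in a coroutine started on Dispatchers.Unconfined.
fun unconfinedThreadNames(): Pair<String, String> = runBlocking {
    var before = ""
    var after = ""
    launch(Dispatchers.Unconfined) {
        // Starts on the thread that called launch.
        before = Thread.currentThread().name
        withContext(Dispatchers.Default) {
            Thread.sleep(50) // stand-in for work done on a pool thread
        }
        // Resumes on whichever thread finished the block above.
        after = Thread.currentThread().name
    }.join()
    before to after
}

fun main() {
    val (before, after) = unconfinedThreadNames()
    println("before: $before")
    println("after:  $after")
}
```

With a confined dispatcher, the code after `withContext` would return to the original dispatcher. Here it typically stays on a DefaultDispatcher worker, so any code that silently assumed it was still on the starting thread is now wrong.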

Conclusion

With this article, you now know where to use specific dispatchers. Dispatchers.Default is for non-blocking operations, while Dispatchers.IO can be used for blocking operations. You can even use Project Loom to unblock your operating system threads. And make sure that you use a dispatcher if you are using `runBlocking` to call your coroutines. There is a bonus dispatcher called Unconfined, which has quite limited use cases, so be very careful when using it; you might end up with behaviours you did not expect. Better to stick to the safe alternatives like the Default or IO dispatchers.

This was the second chapter of our “Coroutine Gotchas” series. We hope you enjoyed it!