Big, bigger, scalable

At bol.com we service millions of products to millions of customers, resulting in billions of pages each year. We want to create the most effective service to our visitors we possibly can. So we spent a lot of time preparing the content, monitoring the systems and analyzing how we can support our customers better in finding what they really want. In some cases, like our recommender system and personalization solutions, we need to analyze a lot of data to get to the required insights.

Our analyses are so big that no single computer can store the data or answer our questions within an acceptable time span. So in 2008 we started experimenting and doing things differently. After having adapted some of our computations to handle any size, we put our first Hadoop job in production in 2010.

This blog is about the lessons we learned, the pitfalls we spotted and the concepts we use to design all of our data-driven applications.

Types of scalability

In essence there are two types of scalability:

- Vertical: Run everything on a single machine and simply buy a bigger one if needed. This has the limitation that at some point even the biggest single machine isn't big enough for the task at hand.

- Horizontal: Run the work on many (normal-sized) machines and simply buy more if needed. This requires designing everything related to running this job to support this model.

At bol.com our data analysis needs have long outgrown vertical scalability, as no computer is big enough to meet our demands. That is why we rely on horizontal scalability.

The basic trick of horizontal scalability is that you split the work into independent parts and then let each part be processed in isolation by an independent worker. The only overhead (if done right) is in the distribution of the work and at the end the gathering of the multiple parts of the result.

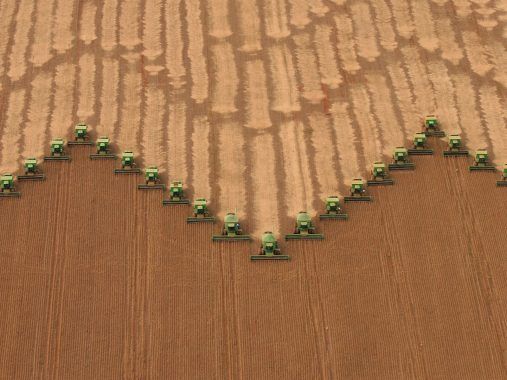

A very clear example of this concept is how soybeans are harvested. Each harvester is an independent worker who has the job of harvesting a specific band in a field.

Making sure an IT system can be run in a horizontally scalable way is harder than most people think. I’ve read many blogs with statements about running ‘BigData’ solutions that are simply incorrect. As a consequence, many have become disappointed in BigData because they never got what they expected (or what had been promised).

Let me explain the concepts we use at bol.com and how we use them when designing new data-intensive applications.

Types of ‘Big’ applications

In general, you’ll see that there are three types of workloads that need distribution across multiple machines:

Work that requires lots of

- CPU time

- Data

- A combination of the two (so both CPU time and data).

In the late 1990s, while working for the National Aerospace Laboratory, I wrote a system to sequence aircraft on the runway to optimize runway utilization and safety (MANTEA paper). This system had an input of only a few kilobytes, while running used a few megabytes of RAM. All it really did was spend many hours of CPU time to find the best solution in a search space with more elements than there are atoms in our planet. This problem was easy to run in a distributed way and required almost no data storage. It was a real in-memory CPU-bound solution.

At bol.com the click logs from our website are many GiBs per day and require a lot of storage (even with gzip compression). The above mentioned in-memory idea won’t work here anymore simply because the size of only a few weeks of data is already too big for any single system to handle. The processing needs fast access to the data stored on some type of persistent storage solution (i.e. disks). At our current scale everything becomes a bottleneck: storage, processing, communication. To handle this effectively we need to design our systems to run at scale.

Designing for scalability

In order to run a workload in a horizontally scalable way we must make sure the following components are all horizontally scalable:

- The hardware

- The processing infrastructure

- The data structure

- The algorithms

In general, we work under the design assumption that our setup must be able to scale to a cluster of thousands of computers doing a calculation on petabytes of data. Even though bol.com is not (yet) at that scale, designing for that scale does have advantages:

- The solutions usually perform a lot better.

- The solutions usually have less operational issues.

- We need to spent less time on maintenance and/or redesign because we can classify the 'size' problem as 'Done'. We simply don’t have to worry about the growth of the systems anymore.

Scalable hardware

When designing the hardware setup of a cluster, the key concept that guides most choices is that of ‘Data locality’. This is a design concept that is essential for large-scale data-intensive systems.

Data locality is the idea that the data that will perform the work is stored locally on the computer. The data is stored on the same systems that do the processing. During a job submission the management systems try to choose the system that has the required data on one of its disks to do the processing. The effect is that the data transfer between machines is kept to a minimum during the job.

Also, one of the slowest parts in a computer is the head of the hard disk. Moving it to the right location takes a few milliseconds. When several applications all compete for the same disk head, the system will wait a significant amount of time for the hard drive head to move to the right location. To minimize this effect, we have opted for processing computers with roughly the same number of CPU cores as hard disks. This means we can use cheaper hard disks that pack more GiBs of storage. Using SSDs is a way to make this point less important. Yet a few years ago (when we purchased our cluster hardware) the storage size, price and reliability factors of SSDs hadn't reached their current level.

I have seen blogs and presentations by vendors of both cloud and SAN/NAS type solutions that get this essential point wrong. They claim that having storage decoupled from the processing is a good idea. While this may work fine in a lot of scenarios, it is a vertically scalable setup. Where local storage has a private connection between disk and cpu (within the computer), using a SAN/NAS type solution introduces a shared (network) connection. At some point this connectivity between storage and processing will become the bottleneck. The combined IO speed of many local hard disks is significantly larger than any interconnecting network. So limiting the need to transport data over the network is a crucial requirement for large-scale systems. This point is also explicitly mentioned in the 2004 slides accompanying the Google MapReduce paper (see slide 27).

Scalable processing infrastructure

When building an application that needs to run on many computers, one will have to face the problem of handling failure efficiently. This problem not only applies to the data storage (on local disks) but also to the workload distribution. The essence of the problem is that in a cluster of many machines the mean time between any two failures has a really short time span (hours or even minutes). The ways in which Google handles this covers much of the historical MapReduce paper from Google.

Today, the open-source arena offers several systems that solve the various parts of this problem. Well-known systems (many of them from the Apache Software Foundation) are:

- Hadoop Yarn: Distributed local storage and resource management

- Hadoop MapReduce: Single step batch processing

- Tez: Multi step batch processing

- Spark: Multi step batch processing

- Spark Streaming: Micro batch processing

- Flink: Stream processing

Thinking about the data flow at scale means you think about all the moving parts of the entire application. Systems like MapReduce persist every intermediate step to disk. Newer systems like Tez, Spark and Flink try to keep as much in memory as possible and combine several steps into a single process. In for example Apache Pig (a batch dataflow language) an important part of the system is the optimizer that restructures the steps to optimize the throughput.

Some systems like Apache Spark, Tez and Flink use memory to increase the performance. Yet I have found that many solutions that pride themselves on being ‘in memory’ are actually vertically scalable solutions. They are limited in that they only work if all the data fits into the memory of a single server. If the advised hardware setup is a single server with 1 TiB of RAM, you can be sure it is vertically scalable.

Scalable data structures

Most people designing and building software systems have been taught to use an entity relational model for the data. While this normalized model is fine for modeling the data, it's quite unsuitable when storing the data for scalable processing. Doing a ‘join’ between a two or more data sets (tables) means that in general all data can be related to all other data. This makes it very hard, if not impossible, to split the data into independent chunks to be distributed across a cluster.

At bol.com we store a lot of the data on our clusters in a de-normalized form: a single table (or file) with a lot of columns. All relations are made part of the record, thus duplicating a lot of data.

Yes, we're actually making the data set bigger in order to process it faster.

A good example of this performance increase is the CSV file of our product catalog for external partners. Several years ago, when our catalog held about 10 million products, it took our relational solution several hours to create the file. Our current solution needs less than 15 minutes, even though our catalog now holds about 50 million products. This solution uses HBase for a storage system and has all related information in the same row.

The fact that there are several SQL solutions available that run on distributed clusters (Hive, Drill, Phoenix, SparkSQL and others) does not mean that doing a join suddenly scales very well. These systems do have a lot of value but it is important to understand the way they work.

At one point, someone did a PoC by putting their relational data into the cloud. There they ran the exact same join on these data sets and they were wondering why it didn’t give the performance gain they expected. Now you know why.

Scalable algorithms

We have the data split into parts that can be processed independently and now we need to do a calculation. In general, for calculations to be done in a scalable way, they need to have both the associative and commutative properties. Simply put: we can split the data into arbitrary chunks, do a calculation on each chunk independently and finally combine all partial results to obtain the requested answer.

In some cases, this is easy: 1+2+3+4 can be calculated as (1) + (2+4) + (3) and also as (1+4) + (3+2).

Calculating the average is a simple extension to this idea. For each subset we sum the numbers and we count them. In the end we divide the two to get the average. Without going into detail similar tricks are available for operations like min, max, variance/standard deviation and many others.

Yet in many cases this calculation cannot be done in a scalable way.

Take for example the simple business question:

How many visitors were on the website last year?

At the data level we need to do a count distinct operation on something like a customer ID or session ID. The simple approach is creating a set in memory, loading all the values and then retrieving the size of this set. This works, but is limited to the size of the memory of a single computer and forces the entire processing onto a single CPU. In order to solve this, we need to step into the arena of data structures that estimate the requested value: ‘Probabilistic data structures‘ or ‘sketches’.

For our problem of doing the ‘count distinct‘ we find the HyperLogLog algorithm to be one of the best known solutions for large-scale.

Another common question we find (often in operational monitoring) is that of the percentile: below which threshold do we find 99% of the observations?

The algorithm is simple: sort (and count) all values and then extract the value at the requested percentile. The snag is that this also requires all values to be moved to a single server. One of the very usable estimating implementations of this problem is T-Digest (Pig UDF). T-Digest creates a small probabilistic data structure that estimates ‘all’ quantiles for that subset and allows merging multiple of those data structures into a combined data structure.

Considerations

Most of what I've written up to this point is on a conceptual level. But I'd like to share what we have learned over the years doing this for real. So here they are, our additional considerations that may help when you actually start designing your own applications:

Plan for the future early

- Switching the design concept is very, very expensive. Going from relational data structures in an RDBMS to a non-relational solution in a cluster is really hard. So starting right away with 'large-scale' as a design goal is a good idea.

- How big is your company going to be in 5 years? If you are a start-up then I really recommend to design and build everything from the start to scale really well.

- In case you cut corners today and run into a wall in the future: which parts of the application are easy to replace and which are hard to replace? Changing the cluster on which the system runs (with or without data locality) is probably easier than changing the underlying data structures.

- Designing to scale horizontally does not mean you need to have a cluster of many servers to run your application on. At bol.com, in 2010, we went to production with our first Hadoop MapReduce application. At that time we did not have a cluster yet. So for the first 6 months we ran our application on a single server, limiting the processing power to using only a week of click data.

It is really different

- Training people to effectively design solutions that scale horizontally takes time. Let them experiment and benchmark their ideas. Because the effects of the design choices are so great, people will have to experience them first hand. Also give them an incentive to make it more efficient. Someone once told me it doesn't matter that his application is inefficient because the cluster is big enough anyway ...

- Some questions can only be estimated at large scale.

- Help your business understand these basics so they can ask better questions.

Be critical of your choices

- If you are really sure you can run it on a single machine 'forever' then a solution that doesn't scale horizontally is fine.

- Any system can perform well at small-scale. When selecting an off-the-shelf solution actually test it at the scale you plan to operate it on in the next few years. There are too many Hadoop enabled systems that don't scale well. So actually give it a dataset of a few TiB and measure the performance.

Conclusions

In this article I have described how bol.com has been designing its data-intensive applications over the last few years. Making a data application at this scale really work efficiently requires designing the entire stack to scale horizontally. If one of these elements is not perfect the entire application will not scale as expected.

And even if you don’t need the scalability right away, just think about it anyway.